Real-Time Data Insight and Foresight with Analytics: A Case Study for a Large Cellular Network Provider

Learn how Admixer helped an Asian mobile network carrier develop a system to collect, store, and analyze data from millions of customers and billions of events for effective real-time analytics.

Does a cellular network company need a real-time data solution?

The short answer: we think yes.

The long answer: we have prepared a dedicated post to answer this question based on our recent experience building an analytics system for an Asian cellular network provider.

Without further intro, let’s get into the “why” question.

Why Invest in a Real-Time Data Analytics System?

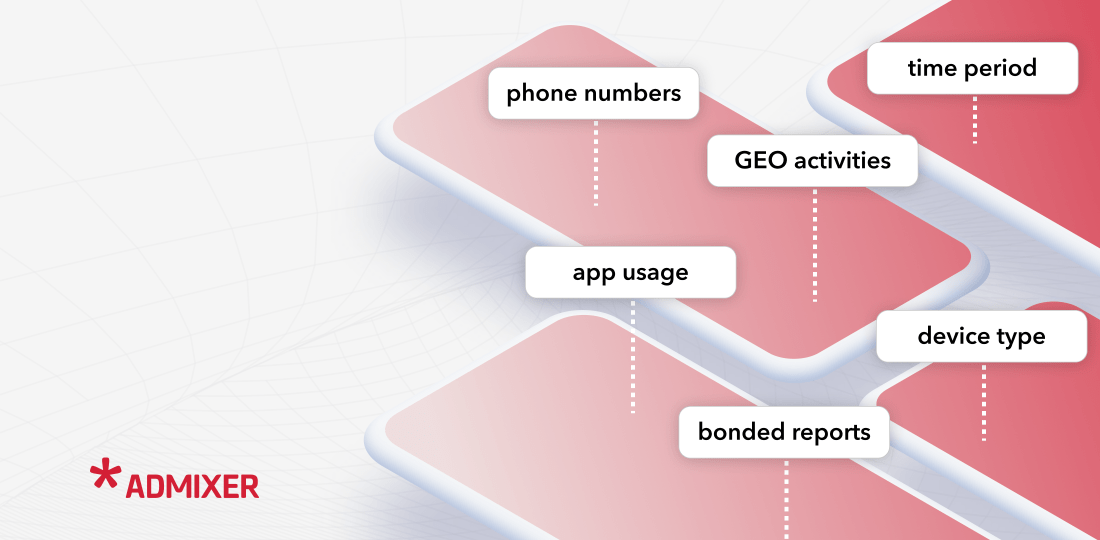

Cellular network providers generate and carry streams of user data: geolocation, device type, phone number, app usage, and many other forms of intelligence.

Everything flows through mobile network providers.

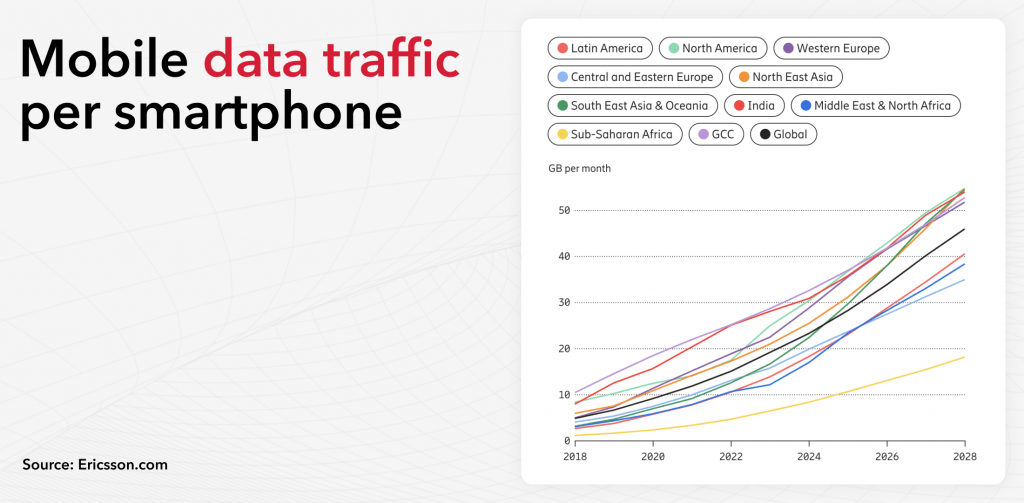

Mobile traffic is growing exponentially every year in every country (see graph above).

If you manage to harness this data, from targeted advertising to security monitoring and traffic engineering, you can improve your business operations on many levels.

Below, we scratch the surface of the most common use cases:

#1: Advertise with DMP

Providers can develop their own Data Management Platforms (or DMPs) to leverage their customer data and create targeted marketing campaigns with personalized offers for their customers. By analyzing customer data, they can identify specific demographics, behavioral patterns, or preferences and tailor their promotions accordingly.

#2: Prevent Security Risks

Analyzing internet traffic allows cell providers to detect and mitigate security risks. By monitoring traffic patterns and analyzing anomalies, they can identify potential attacks, intrusions, or malicious activities and take appropriate measures to safeguard their networks and users.

#3: Optimize Traffic Flows

Analytic systems provide insights into traffic flows, allowing cell providers to optimize their network infrastructure. They can identify areas of congestion, reroute traffic, balance load across different network segments, and improve overall network efficiency.

#4: Comply with Regulations

Cell providers may need to comply with regulatory requirements related to network monitoring and reporting. Analytic systems help in capturing and analyzing traffic data, which can be used for reporting purposes and to ensure compliance with relevant regulations.

#5: Protect User Data

It’s important to note that while cell network providers track and scrutinize their web traffic, they must also prioritize user privacy and comply with the legal and regulatory frameworks governing data privacy and security. The right data storage techniques keep your users’ data and privacy protected in line with privacy laws and industry standards.

Enter the Case Study: Admixer + Mobile Carrier

A large mobile network carrier struggled to build an analytics system to aggregate, process, and analyze its customer data.

With a growing amount of internet traffic passing through the client’s mobile network, there was an opportunity gap they had no intention of missing.

Someone had to implement a robust analytics system, not just to store massive amounts of data but to leverage it for timely decision-making.

They hired Admixer.

The big problem? The cellular network carrier wanted to collect data in real time. They wanted to build a unified data model based on Columnar Database, an open-source database management system for real-time online analytical processing (OLAP) with SQL queries. We are talking about capturing hundreds of gigabits per second of traffic, which puts storage well beyond petabyte scale and close to the exabyte level.

Below we elaborate on the major factors contributing to the project’s complexity:

#1: Data Volume

Cell providers handle a massive amount of internet traffic, which can be challenging to capture, store, and analyze effectively. The sheer volume of data requires robust infrastructure and analytics capabilities to process and derive meaningful insights from the traffic data.

#2: Performance, Real-Time Analysis

Analyzing internet traffic in real time is crucial for detecting and responding to network issues promptly. However, the high-speed nature of network traffic makes real-time analysis complex and demanding. Cell providers need sophisticated tools and algorithms that can handle the continuous flow of data and provide insights in near real-time.

#3: Network Complexity

Cell networks are complex, consisting of multiple technologies, protocols, and network elements. Analyzing traffic across different network segments and technologies, such as 2G, 3G, 4G, and 5G, adds complexity to traffic analysis. Cell providers must account for this complexity and ensure compatibility with various network components and protocols.

#4: Significant Costs

Implementing a system for analyzing internet traffic can involve significant costs for cell providers. From custom development to implementation and ongoing maintenance, the project requires a substantial budget. To give you an idea, you can think of costs in terms of two major factors:

- Hardware costs

Analyzing large volumes of internet traffic requires robust infrastructure, including high-performance servers, storage systems, and network infrastructure.

- Software costs

Cell providers may need to acquire or develop sophisticated analytics software to process and analyze internet traffic data effectively. This includes investing in software licenses, development resources, and ongoing support & maintenance costs.

Laying the Groundwork for the Data Puzzle

Creating such a complex analytics system is no easy task. Before starting a project this big, you need an architectural framework for applying a big data analytics system to the mobile carrier’s business.

Because it’s not just big data––it’s GIGANTIC data.

Even once data collection is solved, storing such an enormous amount of data is not merely hard; it is almost ridiculous. And beyond storing this data, how do you analyze it? And in real time?

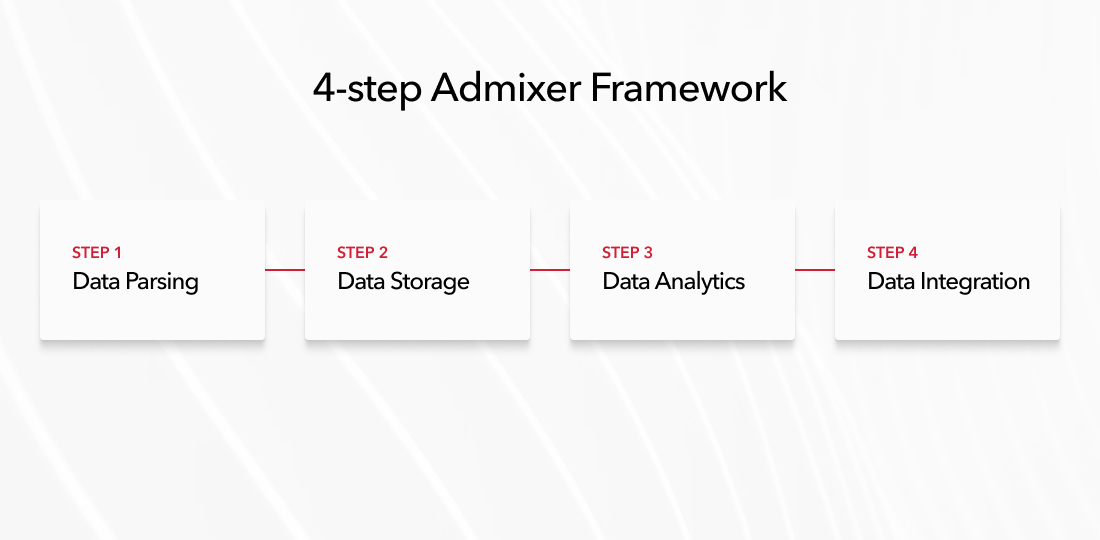

Here at Admixer, we follow a 4-step framework to parse, store, analyze, and integrate data at this scale, which results in a clear project roadmap:

1. Data Parsing. Create data parsers that keep only valuable data and discard everything unnecessary before it reaches storage, at a rate of hundreds of gigabits per second.

2. Data Storage. Create storage that can receive and retain user data for the required period (the requirement can be as long as 6 months), at a scale of hundreds of petabytes.

3. Data Analysis. Process and analyze the stored data so the client can generate custom reports based on many parameters:

- Reports for phone numbers

- Location activity reports

- Application usage reports

- Bonded reports, such as:

  - which phone number(s) used a defined application in a defined location within a defined time window;

  - which device(s) used a defined application in a defined location within a defined time window.

4. Data Integration. Create an Identity and Access Management (IAM) solution to integrate the analytics system into the corporate environment with centralized access control.
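The bonded report in step 3 boils down to filtering events by application, location, and time window, then collecting the distinct phone numbers or devices. Below is a minimal in-memory Python sketch of that logic. The `Event` record, its field names, and the use of a cell ID as the location are assumptions for illustration; the production system would express the same filter as an SQL query against the Columnar Database.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class Event:
    """Hypothetical parsed event; field names are illustrative."""
    msisdn: str     # subscriber phone number
    imei: str       # device identifier
    app: str        # application detected in the traffic
    cell_id: str    # location, approximated by the serving cell
    ts: datetime    # event time


def bonded_report(events, app, cell_id, start, end, key="msisdn"):
    """Distinct phone numbers (key="msisdn") or devices (key="imei") that used
    `app` in `cell_id` during the half-open window [start, end)."""
    return sorted({
        getattr(e, key)
        for e in events
        if e.app == app and e.cell_id == cell_id and start <= e.ts < end
    })


events = [
    Event("msisdn-001", "IMEI-A", "messenger", "cell-42", datetime(2023, 5, 1, 10, 5)),
    Event("msisdn-002", "IMEI-B", "messenger", "cell-42", datetime(2023, 5, 1, 10, 30)),
    Event("msisdn-003", "IMEI-C", "video", "cell-42", datetime(2023, 5, 1, 10, 10)),
    Event("msisdn-001", "IMEI-A", "messenger", "cell-7", datetime(2023, 5, 1, 10, 15)),
]
window = (datetime(2023, 5, 1, 10, 0), datetime(2023, 5, 1, 10, 20))
print(bonded_report(events, "messenger", "cell-42", *window))              # ['msisdn-001']
print(bonded_report(events, "messenger", "cell-42", *window, key="imei"))  # ['IMEI-A']
```

At real data volumes, the same filter only stays fast if the table is sorted or aggregated by the columns it filters on, which is what the storage design described later addresses.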

All of the above must meet high availability (HA) requirements, which doubles the amount of storage needed. Data parsing, as another component of the system, must be highly available as well.

How Did We Achieve the Goals and Cover the Requirements for the Large Cellular Network Carrier?

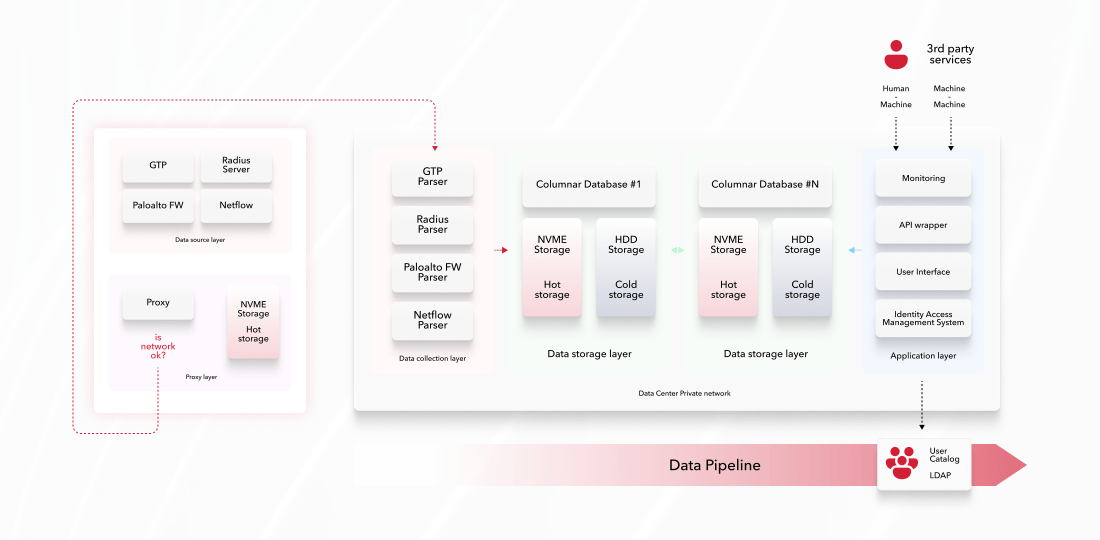

Data is generated outside the Data Center: the Data Sources are accessible only remotely, over the network. Any remote connection must be designed for high availability, which means the connection must be duplicated.

More than that: if the Data Center becomes unreachable from a remote location, the data must not be lost. To avoid data loss caused by a broken link between the remote location and the central Data Center, we made it possible to write data locally until the connection is restored.

At each remote location, the data must be buffered whenever the Data Center is not available, which led us to create a special proxy service.

Proxy layer

High-Level Architecture Diagram

We created a proxy service that is deployed directly at the Data Sources.

The proxy service pulls data from the Data Sources and pushes it to the Data Center. It is configured with multiple endpoints and sends data only to the live ones, which lets us design a highly available Data Collection layer (protocol parsers).

If none of the Data Collection layer endpoints is available, the proxy service starts writing the data to local storage until the Data Collection layer becomes available again.

Once the Data Collection layer is available again, the service resumes pushing data to it, pulling data both from the Data Sources and from the local storage.
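The proxy behavior described above can be sketched in a few lines of Python: send only to live endpoints, spool locally when none is reachable, and drain the backlog first on recovery. This is a hypothetical illustration, not Admixer's proxy. `Endpoint` and `SpoolingProxy` are made-up names, a failed send stands in for a health check, and an in-memory deque stands in for local disk storage.

```python
from collections import deque


class Endpoint:
    """Hypothetical stand-in for one Data Collection layer endpoint."""

    def __init__(self, name):
        self.name = name
        self.alive = True
        self.received = []

    def send(self, record):
        if not self.alive:
            raise ConnectionError(f"{self.name} is unreachable")
        self.received.append(record)


class SpoolingProxy:
    """Push records to the first live endpoint; spool them locally when none is live."""

    def __init__(self, endpoints):
        self.endpoints = endpoints
        self.spool = deque()  # stands in for the proxy's local on-disk storage

    def _try_send(self, record):
        for endpoint in self.endpoints:
            try:
                endpoint.send(record)
                return True
            except ConnectionError:
                continue  # endpoint down: try the next one
        return False

    def flush_spool(self):
        # Replay the backlog oldest-first; stop at the first failure.
        while self.spool:
            if not self._try_send(self.spool[0]):
                return
            self.spool.popleft()

    def push(self, record):
        self.flush_spool()
        # If a backlog remains, queue behind it to preserve order.
        if self.spool or not self._try_send(record):
            self.spool.append(record)


a, b = Endpoint("collector-a"), Endpoint("collector-b")
proxy = SpoolingProxy([a, b])
proxy.push("r1")                 # delivered to collector-a
a.alive = b.alive = False
proxy.push("r2")                 # no live endpoint: spooled locally
proxy.push("r3")
b.alive = True
proxy.push("r4")                 # backlog r2, r3 drained to collector-b, then r4
print(a.received, b.received, list(proxy.spool))  # ['r1'] ['r2', 'r3', 'r4'] []
```

Draining the spool before sending new records keeps delivery in order. A real deployment would also call `flush_spool()` on a timer, so the backlog drains even when the Data Sources are quiet, and would persist the spool to disk so it survives a restart.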

Data Collection layer

We created parsers for four protocols, all written in Rust:

#1: RADIUS parser

The RADIUS (Remote Authentication Dial-In User Service) protocol is a widely used networking protocol for providing centralized authentication, authorization, and accounting (AAA) services. It is commonly used in network access scenarios, such as dial-up and virtual private network (VPN) connections.
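The production parsers are written in Rust. The Python sketch below only illustrates the wire format a RADIUS parser has to walk, following the packet layout in RFC 2865: a 20-byte header (Code, Identifier, Length, 16-byte Authenticator) followed by type-length-value attributes. This case study does not say which attributes the real parser keeps. Calling-Station-Id (type 31) is shown because mobile gateways typically put the subscriber's phone number there.

```python
import struct

ACCOUNTING_REQUEST = 4   # RADIUS Code for Accounting-Request (RFC 2866)
USER_NAME = 1            # attribute types from RFC 2865
FRAMED_IP_ADDRESS = 8
CALLING_STATION_ID = 31


def parse_radius(packet: bytes):
    """Return (code, identifier, {attribute type: [raw values]})."""
    if len(packet) < 20:
        raise ValueError("shorter than the 20-byte RADIUS header")
    code, identifier, length = struct.unpack("!BBH", packet[:4])
    if length < 20 or length > len(packet):
        raise ValueError("invalid Length field")
    attrs = {}
    pos = 20  # skip Code, Identifier, Length and the 16-byte Authenticator
    while pos < length:
        if pos + 2 > length:
            raise ValueError("truncated attribute header")
        attr_type, attr_len = packet[pos], packet[pos + 1]
        if attr_len < 2 or pos + attr_len > length:
            raise ValueError("invalid attribute length")
        attrs.setdefault(attr_type, []).append(packet[pos + 2:pos + attr_len])
        pos += attr_len  # attribute Length covers Type, Length and Value
    return code, identifier, attrs


def attr(attr_type: int, value: bytes) -> bytes:
    """Encode one attribute as Type, Length, Value."""
    return bytes([attr_type, len(value) + 2]) + value


body = (attr(USER_NAME, b"user01")
        + attr(CALLING_STATION_ID, b"15550001")
        + attr(FRAMED_IP_ADDRESS, bytes([10, 0, 0, 7])))
packet = struct.pack("!BBH", ACCOUNTING_REQUEST, 42, 20 + len(body)) + bytes(16) + body
code, ident, attrs = parse_radius(packet)
print(code, ident, attrs[CALLING_STATION_ID][0].decode())  # 4 42 15550001
```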

#2: GTP parser

The GTP (GPRS Tunneling Protocol) is a network protocol used in mobile networks, specifically in the GSM (Global System for Mobile Communications) and UMTS (Universal Mobile Telecommunications System) standards. It facilitates the transfer of packet-switched data between mobile devices and the core network.
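Similarly, here is a Python sketch of reading a GTPv1 header as defined for GTP-U in 3GPP TS 29.281. The header has three parts: a mandatory 8-byte section (flags, message type, length, TEID), four optional octets present when any of the E, S, or PN flags is set, and an optional chain of extension headers. The sketch assumes the parser handles user-plane GTP-U traffic, which the case study does not specify; GTPv2-C control messages use a different header.

```python
import struct

G_PDU = 0xFF  # GTP-U message type carrying a user-plane packet


def parse_gtpu(datagram: bytes):
    """Parse a GTPv1-U header; return message type, TEID, sequence number and payload."""
    if len(datagram) < 8:
        raise ValueError("shorter than the 8-byte mandatory GTPv1 header")
    flags, msg_type, length, teid = struct.unpack("!BBHI", datagram[:8])
    if flags >> 5 != 1 or not flags & 0x10:  # version 1, PT=1 (GTP rather than GTP')
        raise ValueError("not a GTPv1 packet")
    end = 8 + length  # Length counts everything after the mandatory header
    if end > len(datagram):
        raise ValueError("truncated packet")
    pos, seq = 8, None
    if flags & 0x07:  # any of E, S, PN set: 4 optional octets follow
        if pos + 4 > end:
            raise ValueError("truncated optional fields")
        seq_raw, _n_pdu, next_ext = struct.unpack("!HBB", datagram[pos:pos + 4])
        seq = seq_raw if flags & 0x02 else None
        next_ext = next_ext if flags & 0x04 else 0
        pos += 4
        while next_ext:  # walk the extension header chain
            if pos >= end:
                raise ValueError("truncated extension header")
            ext_len = datagram[pos] * 4  # 4-octet units, incl. length and next-type octets
            if ext_len == 0 or pos + ext_len > end:
                raise ValueError("invalid extension header length")
            next_ext = datagram[pos + ext_len - 1]
            pos += ext_len
    return {"msg_type": msg_type, "teid": teid, "seq": seq, "payload": datagram[pos:end]}


inner = b"inner-ip-packet"  # placeholder for the subscriber's IP packet
flags = 0x20 | 0x10 | 0x02  # version 1, PT=1, S flag set
pkt = struct.pack("!BBHI", flags, G_PDU, 4 + len(inner), 0x1234) + struct.pack("!HBB", 7, 0, 0) + inner
h = parse_gtpu(pkt)
print(h["msg_type"], hex(h["teid"]), h["seq"], h["payload"])  # 255 0x1234 7 b'inner-ip-packet'
```

The TEID identifies the tunnel, and thus the subscriber session set up over the control plane, while `payload` is the subscriber's inner IP packet that the parser would inspect further.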

#3: Palo Alto firewall logs parser

Palo Alto Networks is a leading provider of next-generation firewalls (NGFW) that offer advanced network security and threat prevention capabilities. Palo Alto firewalls are designed to protect enterprise networks from a wide range of cyber threats, including malware, exploits, and unauthorized access.

#4: Netflow parser

NetFlow is a network protocol used for collecting and analyzing network traffic data. It provides insights into network traffic patterns, assists in troubleshooting network issues, and aids in network capacity planning.
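For NetFlow, the sketch below decodes version 5 export datagrams: a 24-byte header followed by `count` fixed-size 48-byte flow records. Version 5 is an assumption made to keep the example short. The case study does not say which version the client exports, and NetFlow v9 and IPFIX use templates instead of a fixed record layout.

```python
import socket
import struct

HEADER = struct.Struct("!HHIIIIBBH")               # 24-byte NetFlow v5 header
RECORD = struct.Struct("!4s4s4sHHIIIIHHBBBBHHBBH")  # 48-byte NetFlow v5 flow record


def parse_netflow_v5(datagram: bytes):
    """Return (export time in UNIX seconds, list of flow dicts)."""
    if len(datagram) < HEADER.size:
        raise ValueError("shorter than the NetFlow v5 header")
    version, count, _uptime, unix_secs, _nsecs, _seq, _etype, _eid, _sampling = HEADER.unpack_from(datagram)
    if version != 5:
        raise ValueError(f"unsupported NetFlow version {version}")
    if len(datagram) < HEADER.size + count * RECORD.size:
        raise ValueError("truncated flow records")
    flows = []
    for i in range(count):
        f = RECORD.unpack_from(datagram, HEADER.size + i * RECORD.size)
        flows.append({
            "src": socket.inet_ntoa(f[0]),
            "dst": socket.inet_ntoa(f[1]),
            "packets": f[5],   # dPkts
            "bytes": f[6],     # dOctets
            "src_port": f[9],
            "dst_port": f[10],
            "proto": f[13],    # IP protocol number, e.g. 6 = TCP
        })
    return unix_secs, flows


# One TCP flow 10.0.0.7:51514 -> 198.51.100.20:443 (example addresses).
rec = RECORD.pack(socket.inet_aton("10.0.0.7"), socket.inet_aton("198.51.100.20"), bytes(4),
                  1, 2, 12, 9000, 0, 0, 51514, 443, 0, 0x18, 6, 0, 0, 0, 24, 24, 0)
datagram = HEADER.pack(5, 1, 0, 1_700_000_000, 0, 1, 0, 0, 0) + rec
ts, flows = parse_netflow_v5(datagram)
print(flows[0]["dst"], flows[0]["dst_port"], flows[0]["bytes"])  # 198.51.100.20 443 9000
```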

Why did we use Rust? We chose Rust to keep memory usage low and to avoid a garbage collector. With Rust, we built very powerful parsers that can handle this amount of traffic on reasonable hardware.

Rust provides high-level abstractions, such as iterators and closures, without sacrificing performance. The language’s ownership model and borrow checker allow for efficient memory management and eliminate many runtime overheads associated with garbage collection. Rust has built-in support for safe and efficient concurrency through its ownership and borrowing system. It provides abstractions like threads and asynchronous programming (using async/await), allowing developers to write highly performant concurrent code without sacrificing safety.

Data Storing layer

We deployed a Columnar Database cluster divided into hot storage (NVMe SSD) and cold storage (HDD).

Hot storage holds a limited set of dimensions and metrics that the client identified as needing the fastest possible access. It can return data in milliseconds or seconds across the entire time range. This is achieved by preparing the data structure correctly and by searching reduced data sets that contain only the necessary fields.

Cold storage, in turn, can store huge amounts of data but returns it much more slowly. All dimensions and metrics are kept in cold storage for the entire retention period, so any necessary data can be retrieved, although not quickly (we are talking about minutes to tens of minutes).

This separation sends fast-access reads to the NVMe disks and leaves bulk storage to the HDDs.
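The hot/cold split implies a routing decision for every report. If the hot tier contains all the columns a query needs, the query goes there; otherwise it falls back to the cold tier, which holds everything. A minimal Python sketch of that rule follows. The `HOT_COLUMNS` set and the `Query` type are hypothetical, since the actual hot-storage schema is defined by the client.

```python
from dataclasses import dataclass

# Hypothetical hot-tier schema: the dimensions and metrics the client marked
# as needing the fastest possible access.
HOT_COLUMNS = frozenset({"event_date", "msisdn", "app", "cell_id", "bytes"})


@dataclass(frozen=True)
class Query:
    columns: frozenset  # every dimension and metric the query reads


def choose_tier(query: Query) -> str:
    """Use the NVMe hot tier only if it holds every column the query needs;
    otherwise use the HDD cold tier, which stores all columns."""
    return "hot" if query.columns <= HOT_COLUMNS else "cold"


print(choose_tier(Query(frozenset({"msisdn", "app", "bytes"}))))    # hot
print(choose_tier(Query(frozenset({"msisdn", "imei", "tls_sni"}))))  # cold
```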

How Did We Go About the Data Processing Flow?

“Think of your database as a book library. People stroll down the aisles and scan shelves to pick out the one book that piques their interest. When you search for a book in alphabetical order, it might take you a while to find it. However, once you look for books by your favorite genre, the odds are that you find your preferred book much faster,” –– says Oleksii Koshlatyi, Admixer CTO

Storing information in your database is like sorting books in a library: the data should be easy to reach so that SQL queries perform well. Materialized Views in the Columnar Database make it possible to prepare data on the fly and process only the necessary data, without scanning the whole data set to find the needed values.

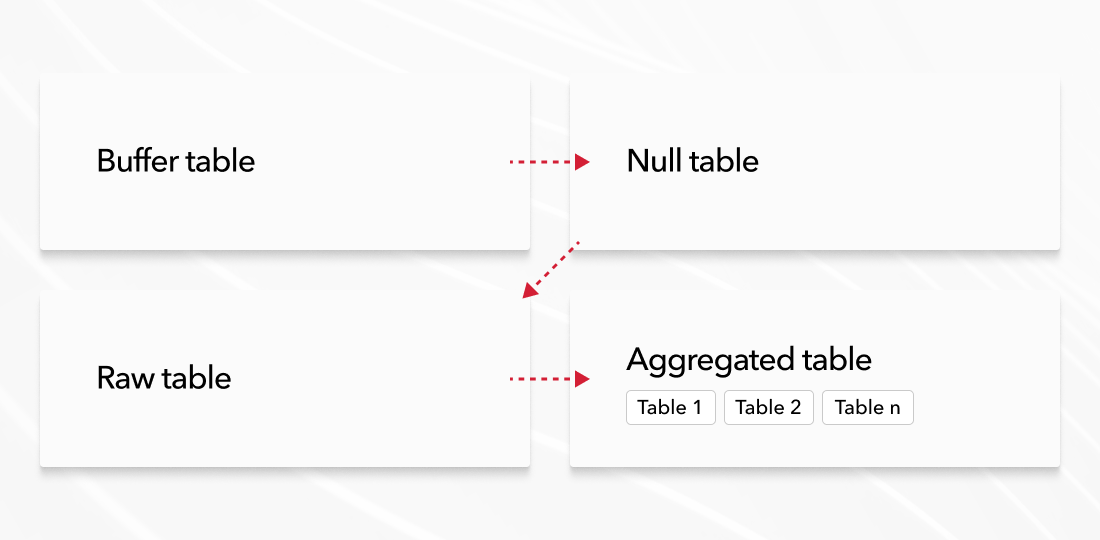

All data first goes into a table with the Buffer engine, which accumulates rows to avoid frequent inserts into the Columnar Database (the maximum recommended number of inserts in the Columnar Database is 1000 per second per server). Each protocol gets a separate buffer.
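The Buffer engine's job is to turn a stream of small writes into a few large inserts. The Python class below mimics that idea with two hypothetical thresholds, a row count and an age limit, and flushes when either is reached. It is a simplified model, not the database's own implementation. The real Buffer engine is configured with minimum and maximum time, row, and byte thresholds and also flushes in the background.

```python
import time


class InsertBuffer:
    """Collect rows and pass them to `flush_fn` in batches, so the database
    receives a few large inserts instead of many small ones."""

    def __init__(self, flush_fn, max_rows=100_000, max_seconds=1.0, clock=time.monotonic):
        self.flush_fn = flush_fn
        self.max_rows = max_rows
        self.max_seconds = max_seconds
        self.clock = clock
        self.rows = []
        self.started = 0.0  # when the current batch received its first row

    def add(self, row):
        if not self.rows:
            self.started = self.clock()
        self.rows.append(row)
        # Flush as soon as either hypothetical limit is reached.
        if len(self.rows) >= self.max_rows or self.clock() - self.started >= self.max_seconds:
            self.flush()

    def flush(self):
        if self.rows:
            batch, self.rows = self.rows, []
            self.flush_fn(batch)


# Usage with a fake clock so the example is deterministic.
batches, now = [], [0.0]
buf = InsertBuffer(batches.append, max_rows=3, max_seconds=1.0, clock=lambda: now[0])
for i in range(7):
    buf.add(i)
now[0] = 1.5
buf.add(7)  # the current batch is now older than max_seconds
print(batches)  # [[0, 1, 2], [3, 4, 5], [6, 7]]
```

In the setup described above, each protocol would get its own instance. This sketch checks the age limit only when a row arrives. A real buffer also needs a timer so that a quiet stream is still flushed.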

After buffering, the data is flushed into a table with the Null engine. This engine discards whatever is written to it, which suits cases where we do not need to keep the raw incoming events in one place. On this table, you can attach MaterializedViews that read the inserted data and write it to other tables where the real data is stored. A MaterializedView works like an insert trigger: when data enters the table the view is attached to, an SQL query transforms it into the required shape and inserts the result into the target table.

We chose the MergeTree engine to store data in flat form, that is, in its original, non-aggregated state. MergeTree is the main engine in the Columnar Database and provides many options for working with and storing data.

For more efficient data retrieval, it is better not to query large flat tables with many dimensions. Instead, create smaller aggregations with the minimum required set of dimensions and use them for queries. These are also built with a MaterializedView that writes data to a dedicated table.

Such tables should hold aggregated data, which makes storage and processing more efficient. For this purpose, the Columnar Database offers SummingMergeTree, one of the engines of the MergeTree family.
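The Null table, MaterializedView, and SummingMergeTree chain can be modeled in memory to show what each piece does. The Python sketch below is hypothetical and uses three stand-in classes:

- `NullTable` keeps nothing and forwards every batch to its attached views.
- `FlatTable` keeps rows as inserted (the MergeTree role).
- `SummingTable` collapses rows that share a key when its parts are merged (the SummingMergeTree role).

In the real database this chain is declared in SQL DDL rather than written as application code.

```python
from collections import defaultdict


class NullTable:
    """Stores nothing; forwards each inserted batch to the attached views."""

    def __init__(self):
        self.views = []  # (transform, target table) pairs

    def attach_view(self, transform, target):
        self.views.append((transform, target))

    def insert(self, batch):
        for transform, target in self.views:  # fires like an insert trigger
            target.insert([transform(row) for row in batch])


class FlatTable:
    """MergeTree stand-in: keeps every row exactly as inserted."""

    def __init__(self):
        self.rows = []

    def insert(self, rows):
        self.rows.extend(rows)


class SummingTable:
    """SummingMergeTree stand-in: rows sharing a key are summed when parts merge."""

    def __init__(self, key_cols, sum_cols):
        self.key_cols, self.sum_cols = key_cols, sum_cols
        self.parts = []  # every insert creates a new, unmerged part

    def insert(self, rows):
        self.parts.append(list(rows))

    def merge(self):
        totals = defaultdict(lambda: dict.fromkeys(self.sum_cols, 0))
        for part in self.parts:
            for row in part:
                acc = totals[tuple(row[c] for c in self.key_cols)]
                for c in self.sum_cols:
                    acc[c] += row[c]
        self.parts = [[{**dict(zip(self.key_cols, key)), **sums} for key, sums in totals.items()]]


raw = NullTable()
flat = FlatTable()
app_daily = SummingTable(key_cols=("day", "app"), sum_cols=("bytes", "events"))
raw.attach_view(lambda r: r, flat)  # flat copy of every event
raw.attach_view(lambda r: {"day": r["ts"][:10], "app": r["app"], "bytes": r["bytes"], "events": 1}, app_daily)

raw.insert([{"ts": "2023-05-01T10:00", "app": "video", "bytes": 500},
            {"ts": "2023-05-01T11:00", "app": "video", "bytes": 300}])
raw.insert([{"ts": "2023-05-01T12:00", "app": "chat", "bytes": 20}])
print(len(app_daily.parts))  # 2 parts before the merge
app_daily.merge()
print(app_daily.parts[0])
```

The database merges parts in the background at an unspecified time, so a SummingMergeTree table can still contain several unmerged rows for the same key. Queries against it should therefore aggregate with `sum()` and `GROUP BY` rather than rely on merges having finished.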

To get data quickly, it is best to send queries to the pre-aggregated tables.

Accessing via the Application Layer

We developed an application that provides API and UI access to the required reports through an external authentication layer: users log in to the UI through the client’s existing corporate authentication layer, LDAP.

We integrated the application layer with the customer’s LDAP server, added the ability to create and manage roles for the service users (operators, management team), and made the operators’ requests to the analytics system visible for review.
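The role model described above can be sketched as a mapping from LDAP groups to application roles, with every request written to an audit log. In this hypothetical Python sketch, the group DNs, role names, and permissions are invented for illustration. The sketch assumes the LDAP bind and group lookup, which an LDAP client library would perform, have already happened.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical mapping from LDAP group DNs to application roles.
GROUP_ROLES = {
    "cn=analytics-operators,ou=groups,dc=example,dc=com": "operator",
    "cn=analytics-managers,ou=groups,dc=example,dc=com": "manager",
}

# Hypothetical permissions granted by each role.
ROLE_PERMISSIONS = {
    "operator": {"run_report"},
    "manager": {"run_report", "view_audit_log", "manage_roles"},
}


@dataclass
class AccessControl:
    audit_log: list = field(default_factory=list)

    def roles_for(self, ldap_groups):
        """Map the user's LDAP groups (already fetched after login) to roles."""
        return {GROUP_ROLES[g] for g in ldap_groups if g in GROUP_ROLES}

    def authorize(self, user, ldap_groups, action, detail=""):
        allowed = any(action in ROLE_PERMISSIONS[r] for r in self.roles_for(ldap_groups))
        # Record every request, allowed or not, so it can be reviewed later.
        self.audit_log.append((datetime.now(timezone.utc), user, action, detail, allowed))
        return allowed


acl = AccessControl()
ops = ["cn=analytics-operators,ou=groups,dc=example,dc=com"]
print(acl.authorize("alice", ops, "run_report", "app usage, cell-42"))  # True
print(acl.authorize("alice", ops, "view_audit_log"))                     # False
print(len(acl.audit_log))                                               # 2
```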

Systems this complex need extensive monitoring. We used Zabbix and Grafana for monitoring and visualization.

Data Copy penalty

Big data always gives you a hard time when you move it from one place to another. Every storage medium has finite endurance, and for SSDs this resource is quite limited.

Most consumer-grade SSDs are rated for only about 600 full-disk write cycles.

Enterprise-grade SSDs (for data centers) are rated for about 1600 full-disk write cycles.

For 100 Gbit/s of network traffic, we need to write the following:

100 * 10^9 / 8 * 3600 * 24 = 1.08 * 10^15 (bytes) per day, which is around 1 Petabyte of writing every single day.

10 enterprise-grade SSDs with 4TB of storage each can handle only:

10 * 4 * 1600 = 64000 (TB) of data during their entire life.

To handle all traffic from a single 100 Gbit/s link for one year, we need to write about 365 PB of data (taking 1 PB per day), which requires around:

365000 / (4 * 1600) ≈ 57 enterprise SSDs, 4 TB each.

“57 enterprise SSDs every single year!”

This is why we do not use queue services as an intermediate layer: every extra copy of the stream written to disk multiplies this wear.
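The endurance arithmetic in this section can be re-checked with a few lines of Python. The inputs are the figures from the text. The last result drops the rounding to 1 PB per day, which raises the estimate from about 57 to about 62 SSDs per year.

```python
LINK_GBPS = 100        # traffic rate from the text, Gbit/s
SECONDS_PER_DAY = 24 * 3600
SSD_TB = 4             # capacity of one enterprise SSD, TB
SSD_CYCLES = 1600      # rated full-disk write cycles, enterprise grade

bytes_per_day = LINK_GBPS * 10**9 / 8 * SECONDS_PER_DAY
tb_per_ssd_lifetime = SSD_TB * SSD_CYCLES   # 6400 TB written over one SSD's life
fleet_tb = 10 * tb_per_ssd_lifetime         # 10 SSDs: 64,000 TB

# The text rounds daily writes to 1 PB, i.e. 365 PB = 365,000 TB per year.
ssds_rounded = 365_000 / tb_per_ssd_lifetime
# Without rounding: 1.08 PB/day * 365 days, converted to TB.
ssds_exact = bytes_per_day * 365 / 10**12 / tb_per_ssd_lifetime

print(f"{bytes_per_day:.3e} bytes per day")           # 1.080e+15 bytes per day
print(fleet_tb)                                       # 64000
print(f"{ssds_rounded:.1f} SSDs per year (rounded)")  # 57.0 SSDs per year (rounded)
print(f"{ssds_exact:.1f} SSDs per year (exact)")      # 61.6 SSDs per year (exact)
```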

Minimizing Data Storage

Before saving the data we need, we first get rid of unnecessary data. Preprocessing can significantly reduce the amount of storage the project requires.

Admixer’s proprietary solution allowed the client to reduce on-disk data storage by a factor of 20 to 1000 (depending on the proportion of useful data for a given protocol).
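Admixer's preprocessing is proprietary, so the sketch below only illustrates the general idea behind such reductions. It drops records that carry no useful identifier and keeps only a whitelist of fields. The field names and the `KEEP_FIELDS` whitelist are hypothetical, and JSON-encoded size serves only as a rough proxy for bytes on disk. The real reduction factor depends on the protocol, as noted above.

```python
import json

# Hypothetical whitelist of fields worth keeping for one protocol.
KEEP_FIELDS = ("ts", "msisdn", "app", "cell_id", "bytes")


def preprocess(records, keep=KEEP_FIELDS):
    """Skip records without a subscriber ID and keep only whitelisted fields."""
    for record in records:
        if record.get("msisdn"):
            yield {k: record[k] for k in keep if k in record}


raw = [
    {"ts": 1, "msisdn": "msisdn-001", "app": "video", "cell_id": "cell-42", "bytes": 500,
     "payload_hex": "ab" * 200, "tcp_options": "mss=1460,sack", "ttl": 64},
    {"ts": 2, "msisdn": None, "app": "dns", "cell_id": "cell-42", "bytes": 80,
     "payload_hex": "cd" * 40, "ttl": 64},
]
kept = list(preprocess(raw))
raw_size = sum(len(json.dumps(r)) for r in raw)    # rough proxy for bytes on disk
kept_size = sum(len(json.dumps(r)) for r in kept)
print(len(kept), f"record(s) kept, about {raw_size / kept_size:.0f}x smaller")
```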

Driving Business Outcomes for the Client

Driven by data and led by technology, Admixer delivered the following results:

✅ Less Costs

Before: Data processing consumed excess storage, putting heavy pressure on the client’s capital expenditures.

After: The client benefits from precise insights into ad-serving locations and timing, saving millions of dollars annually that could otherwise be lost to dropped network connections or missed advertising opportunities. Rust and optimized algorithms let us use fewer computing resources and cut costs.

✅ Higher Performance

Before: Retrieving data for analytics took anywhere from several minutes to over an hour. Besides slow data delivery, some data could fall through the cracks.

After: The optimized data structure in the Columnar Database returns quick responses over billions of rows, cutting response times from minutes (or hours) to as little as 300 milliseconds. Requests are now handled in near real time.

✅ Scalable Growth

Before: Their mobile traffic data volumes were increasing exponentially, resulting in timeouts and system overload.

After: Sharding the Columnar Database allows flexible scaling up and down, so the whole analytics system is ready to handle volatile data volumes and remains resilient as traffic keeps growing.

✅ High Availability

Before: Their Data Center was sometimes unreachable from the remote locations, which resulted in significant data loss.

After: The proxy layer checks the health of the Data Collection layer endpoints and temporarily stores data locally during any outage. The Data Collection layer is duplicated and distributed across multiple computing instances.

✅ Enhanced Security

Before: Their business experienced up to 600 incidents per year, caused by server overload or inaccurate data flow.

After: The Application layer provides API access with centralized authentication via LDAP (the whole system sits behind NAT), reducing the number of incidents from 600 to 50 per year.

What Happened After the Project Finished?

The new developments turned out to be a game-changer for the client’s business. The cellular network provider has opened doors to new opportunities––from running targeted advertising campaigns to an all-around view of their customers and the clear foresight to mitigate any risks related to data security.

“You won’t believe the sheer amount of data that gets generated every single second within our cellular network; it’s beyond what a human can comprehend. But Admixer gave us an actionable plan to transform this major problem into a competitive edge for our business,” said their newly appointed Chief Information Officer.

Ignoring the importance of real-time analytics in your cellular network business could lead to devastating data breaches and missed opportunities. If you’re serious about scaling your company, you need to start implementing sustainable big-data analytics solutions.

P.S. If you’d like to make a strategic decision about your data-driven approaches––get in touch with Admixer’s CTO, Oleksii Koshlatyi––who can audit your high-level data architecture and provide a free perspective on your current analytics system.

Feel free to send him a direct message on LinkedIn.